“To err is human, to forgive divine” — Alexander Pope

Someone had blundered. It’s a truism of most organizations and most human initiatives, and it’s especially true in wartime. Alfred, Lord Tennyson stamped the phrase indelibly into the English language in his poem “The Charge of the Light Brigade”: “‘Forward, the Light Brigade!’ / Was there a man dismayed? / Not though the soldier knew / Someone had blundered. / Theirs not to make reply, / Theirs not to reason why, / Theirs but to do and die. / Into the valley of Death / Rode the six hundred.”

In Tennyson’s poem, the soldiers charge heroically to their deaths even though they know that incompetence among the higher-ups has doomed them. The historian Paul Fussell, in Wartime, his study of what life was actually like for soldiers during World War II, has a whole chapter titled “Someone had blundered,” and in it he identifies the key reasons these mistakes happened: ignorance, fear, overconfidence, and “Bright Ideas” (which usually turn out to be counterproductive or even disastrous). Blundering is an inescapable part of human existence.

Blundering is also an important part of many games. Tic-tac-toe, for example, is pointless unless someone blunders: a player can win only if the opponent makes an error; otherwise the match ends in a cat’s game, a tie. Similarly, a game of chess often hinges not on the brilliant move but on the blunder, the mistake that leaves a piece in danger or a king under attack. Given the complexity of chess, almost every game is decided this way. That is why Edgar Allan Poe, in his short story “The Murders in the Rue Morgue,” argues that in chess, “where the pieces have different and bizarre motions, with various and variable values, what is only complex is mistaken (a not unusual error) for what is profound.” He therefore asserts that draughts (checkers) and whist are superior games from an analytical perspective, since they are less prone to blundering and more amenable to pure reason.
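The claim that tic-tac-toe ends in a tie unless someone errs can be checked by brute force. The following is a minimal minimax sketch (the function names and the board-as-string encoding are illustrative choices, not anything from the original): it scores every position from X’s point of view and shows that the empty board is worth 0, a draw, while a single weak reply by O hands X a forced win.

```python
from functools import lru_cache
from typing import Optional

# The eight three-in-a-row lines on a 3x3 board whose cells are numbered 0..8, row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board: str, to_move: str) -> int:
    """Minimax value with X maximizing: +1 X can force a win, -1 O can, 0 a draw."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # full board and no winner: a cat's game
    other = "O" if to_move == "X" else "X"
    results = [value(board[:i] + to_move + board[i + 1:], other)
               for i, cell in enumerate(board) if cell == " "]
    return max(results) if to_move == "X" else min(results)


if __name__ == "__main__":
    # Empty board: perfect play by both sides ends in a tie.
    print(value(" " * 9, "X"))  # 0
    # X in the center, O answers on an edge (cell 1): X can now force a win.
    print(value(" O  X    ", "X"))  # 1
```

The search is exhaustive because tic-tac-toe is tiny; the same idea is hopeless for chess, which is exactly why chess games are decided by blunders rather than by calculation alone.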

Whatever we might think of Poe’s argument—and as an avid chess player I would take issue with it—there’s little doubt that the blunder is an essential part of play in chess, as in many other games. Indeed, it has its virtues. A blunder can let a weaker player beat a stronger player. A blunder adds emotional intensity to the match—the beneficiary savors the unexpected triumph, the blunderer feels the pain of the loss even more keenly, knowing he or she was not outplayed but instead gave it away (or at least that’s the narrative one tells oneself).

I have found this to be true in my own games. While I’m a fairly good player, I dislike playing computers, because most chess programs these days don’t make obvious mistakes. Their ability to calculate many moves ahead means they can be beaten only with superior strategy that is beyond the ken of most players.

Programmers of chess software have recognized this, so they design their programs to sometimes choose a weaker move over a stronger one or even to make an intentional mistake. The popular online chess site Chess.com, for example, offers a range of computer opponents with varying abilities and playing styles, such as Elani, who “always keeps a straight face, even when she loses a piece. Was it a blunder or a sneaky sacrifice?” By making the program feel more like a flawed and fallible human, designers can make the game more interesting.
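The source doesn’t say how Chess.com’s bots weaken their play, so the following is only a generic sketch of one common technique, softmax (Boltzmann) sampling: instead of always taking the top-scored move, the program picks at random with weights that favor, but don’t guarantee, the best one. The function `pick_move`, the move names, and their scores are all hypothetical.

```python
import math
import random


def pick_move(scored_moves: dict[str, float], temperature: float,
              rng: random.Random) -> str:
    """Choose a move at random, weighting each one by exp(score / temperature).

    A temperature near zero almost always returns the top-scored move (a strong
    bot); a higher temperature lets weaker moves through more often (a fallible,
    more human-feeling bot).
    """
    if temperature <= 0:
        return max(scored_moves, key=scored_moves.get)
    best = max(scored_moves.values())
    # Shifting by the best score keeps math.exp from overflowing.
    weights = [math.exp((s - best) / temperature) for s in scored_moves.values()]
    return rng.choices(list(scored_moves), weights=weights, k=1)[0]


if __name__ == "__main__":
    # Hypothetical engine evaluations in pawns; a real program would get these
    # from its search.
    moves = {"Nxe5": 3.0, "Nf3": 0.4, "h4": -0.2, "Qh5": -6.0}
    rng = random.Random(42)
    for t in (0.0, 0.5, 2.0):
        picks = [pick_move(moves, t, rng) for _ in range(1000)]
        share = picks.count("Nxe5") / len(picks)
        print(f"temperature {t}: best move chosen {share:.0%} of the time")
```

The temperature acts as a single difficulty knob: because weights fall off with how much worse a move is, small inaccuracies show up far more often than outright blunders, which is roughly how fallible humans play.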


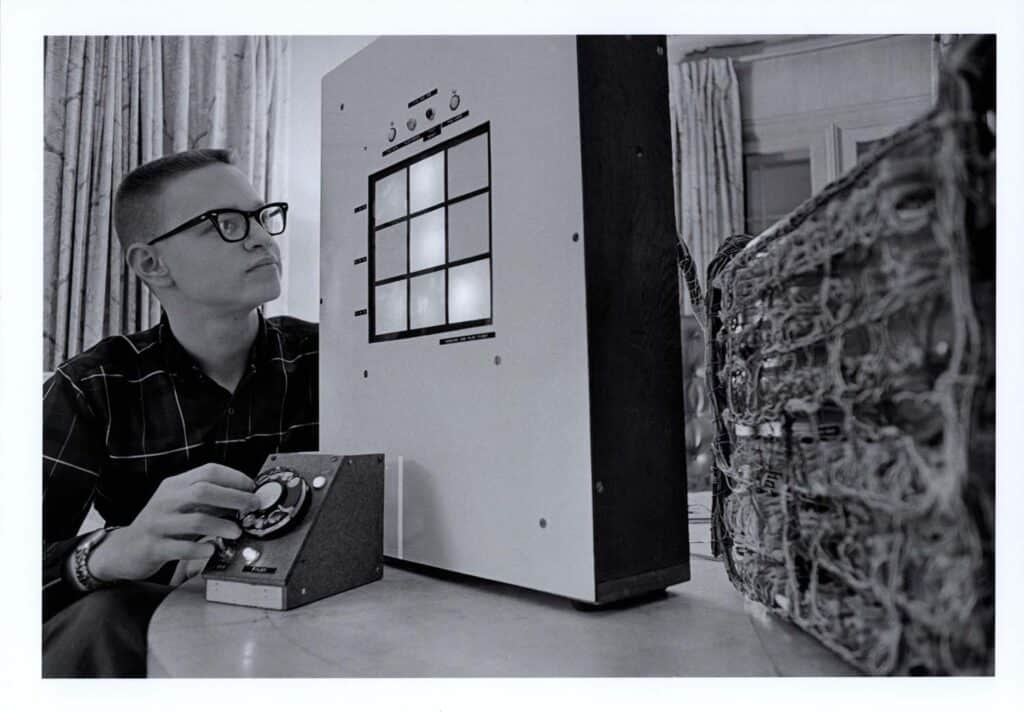

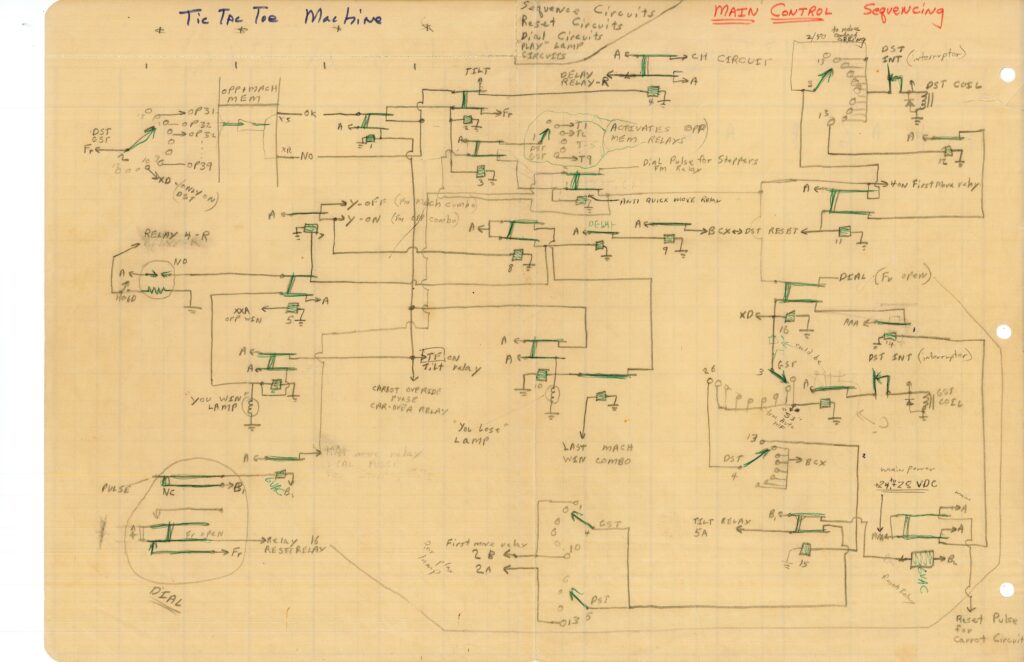

Programmers have been introducing this sort of algorithmic stupidity into game-playing programs for a long time. At The Strong, we have an ingenious tic-tac-toe machine designed in 1963 by high schooler Jeff Mattox using hundreds of telephone relays. He quickly realized that playing a competent machine whose every game ended in a tie would not be very interesting, so he programmed it to occasionally make a wrong move (what he called a “carrot”) so that, on average, it would lose one game in eight.
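Mattox’s relay logic isn’t described here, so the following is only a modern sketch of the same idea, assuming the machine knows the perfect move (computed here with a negamax search) and, with some probability `carrot_rate`, deliberately plays a strictly worse one instead. The names `choose`, `score`, and `carrot_rate` are hypothetical.

```python
import random
from functools import lru_cache
from typing import List, Optional

LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def legal(board: str) -> List[int]:
    return [i for i, cell in enumerate(board) if cell == " "]


def play(board: str, i: int, mark: str) -> str:
    return board[:i] + mark + board[i + 1:]


def opponent(mark: str) -> str:
    return "O" if mark == "X" else "X"


@lru_cache(maxsize=None)
def score(board: str, me: str) -> int:
    """Negamax value for the side to move: +1 forced win, 0 draw, -1 forced loss."""
    if winner(board) is not None:
        return -1  # the player who just moved completed a line
    if not legal(board):
        return 0
    return max(-score(play(board, i, me), opponent(me)) for i in legal(board))


def choose(board: str, me: str, carrot_rate: float, rng: random.Random) -> int:
    """Usually play a best move; with probability carrot_rate play a worse one."""
    ranked = {i: -score(play(board, i, me), opponent(me)) for i in legal(board)}
    best = max(ranked.values())
    good = [i for i, v in ranked.items() if v == best]
    worse = [i for i, v in ranked.items() if v < best]
    if worse and rng.random() < carrot_rate:
        return rng.choice(worse)  # the deliberate mistake: a "carrot"
    return rng.choice(good)


if __name__ == "__main__":
    rng = random.Random(1963)
    board = "XX OO    "  # X to move; cell 2 wins, anything else throws it away
    print(choose(board, "X", carrot_rate=0.0, rng=rng))  # 2
    print(choose(board, "X", carrot_rate=1.0, rng=rng))  # some cell other than 2
```

A per-move carrot rate doesn’t map directly onto Mattox’s one-loss-in-eight average: a carrot only costs the machine the game if the human notices and exploits it, so a real machine’s rate would have to be tuned through play.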

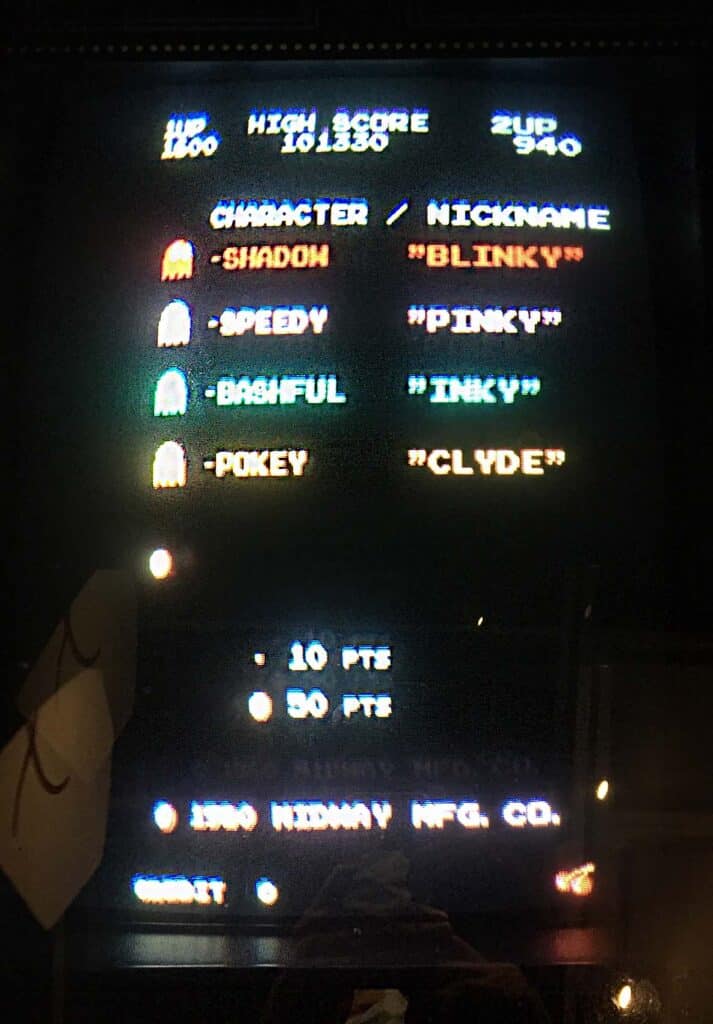

Later video games do this as well, using a variety of means to have the computer make choices that are not always the optimal move at that moment but that make the game more fun. Pac-Man, for example, achieves this by giving each ghost its own logic, which in effect gives each character a distinct, though subtle, personality.

The logic of the ghosts is fairly sophisticated considering the game was released in 1980, but at its simplest level the schema goes like this:

Red Ghost—go towards player

Pink Ghost—aim for a spot a few spaces (four tiles) in front of Pac-Man

Blue Ghost—move toward a position calculated from the locations of the Red Ghost and Pac-Man; the effect is to make its movement seem somewhat random

Orange Ghost—go after Pac-Man but run away if it gets too close
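The schema above can be sketched in code. The rules below follow the versions commonly reported from fan reverse-engineering of the arcade game rather than any published Namco source, so treat them as assumptions: each ghost computes a target tile, and at every intersection it turns toward whichever open neighboring tile is closest in a straight line to that target, never reversing. The function names, coordinates, tie-break order, and the eight-tile threshold for the Orange Ghost are illustrative, and the sketch ignores the original’s quirks (such as an overflow bug when Pac-Man faces up).

```python
from math import dist
from typing import Dict, Set, Tuple

Tile = Tuple[int, int]

# Unit step for each direction; y grows downward, as on the screen.
STEP: Dict[str, Tile] = {"up": (0, -1), "left": (-1, 0), "down": (0, 1), "right": (1, 0)}
REVERSE = {"up": "down", "down": "up", "left": "right", "right": "left"}


def ahead(pos: Tile, facing: str, n: int) -> Tile:
    """The tile n steps from pos in the given direction."""
    dx, dy = STEP[facing]
    return (pos[0] + n * dx, pos[1] + n * dy)


def red_target(pac: Tile) -> Tile:
    return pac  # head straight for Pac-Man


def pink_target(pac: Tile, facing: str) -> Tile:
    return ahead(pac, facing, 4)  # ambush four tiles in front of Pac-Man


def blue_target(pac: Tile, facing: str, red: Tile) -> Tile:
    # Take the tile two ahead of Pac-Man and double the vector from the Red Ghost to it.
    px, py = ahead(pac, facing, 2)
    return (2 * px - red[0], 2 * py - red[1])


def orange_target(pac: Tile, orange: Tile, home_corner: Tile) -> Tile:
    # Chase when far away; retreat toward its corner when within eight tiles.
    return pac if dist(pac, orange) > 8 else home_corner


def steer(pos: Tile, heading: str, target: Tile, walls: Set[Tile]) -> str:
    """At an intersection, turn toward the open neighbor closest to the target."""
    options = [d for d in ("up", "left", "down", "right")
               if d != REVERSE[heading] and ahead(pos, d, 1) not in walls]
    # min() keeps the first of equally close options, so the tuple order above
    # doubles as a tie-break preference. Assumes no dead ends (options non-empty).
    return min(options, key=lambda d: dist(ahead(pos, d, 1), target))


if __name__ == "__main__":
    pac, facing, red = (13, 23), "left", (13, 11)
    print("red", red_target(pac))
    print("pink", pink_target(pac, facing))
    print("blue", blue_target(pac, facing, red))
    print("orange", orange_target(pac, (14, 20), home_corner=(0, 35)))
    print("red ghost turns", steer(red, "left", red_target(pac), walls=set()))
```

Because the Blue Ghost’s target depends on where the Red Ghost happens to be, it can land far from Pac-Man, which is why that ghost’s path looks erratic even though every step is deterministic.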

This is an oversimplification, as the ghosts switch among different modes depending on whether they’re chasing Pac-Man, running away from him, or simply scattering to different corners of the screen. At times these movements seem ridiculous, like blunders, but the overall effect is to make the game less predictable and more enjoyable.
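The mode switching can be sketched as a simple timetable. The phase lengths below are the first-level figures commonly cited from fan reverse-engineering of the arcade game (scatter and chase alternate a few times, then the ghosts chase for good); treat both the numbers and the function `ghost_mode` as assumptions. In scatter mode each ghost targets its home corner, in chase mode it uses its own targeting rule, and in frightened mode (after Pac-Man eats a power pellet) it wanders.

```python
from typing import List, Tuple

# (mode, seconds) phases for the first level, as commonly reported from
# reverse-engineering of the arcade game; treat the numbers as assumptions.
LEVEL_1: List[Tuple[str, float]] = [
    ("scatter", 7), ("chase", 20), ("scatter", 7), ("chase", 20),
    ("scatter", 5), ("chase", 20), ("scatter", 5),
]


def ghost_mode(t: float, frightened: bool = False,
               phases: List[Tuple[str, float]] = LEVEL_1) -> str:
    """Mode at time t (seconds on the scatter/chase clock) for a ghost.

    Frightened mode overrides the schedule; the caller is assumed to pause
    the clock t while the ghosts are frightened.
    """
    if frightened:
        return "frightened"
    elapsed = 0.0
    for mode, length in phases:
        elapsed += length
        if t < elapsed:
            return mode
    return "chase"  # after the last scatter phase, the ghosts chase for good


if __name__ == "__main__":
    for t in (0, 10, 30, 60, 100):
        print(t, ghost_mode(t))
```

The brief scatter phases are what make the ghosts periodically peel away from a cornered Pac-Man, exactly the kind of move that looks like a blunder from the player’s side of the screen.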

And yet the ghosts are just following an algorithm and have no agency of their own. In that case, can they be said to have blundered? That raises the question of what it means to blunder, which got me thinking about the word’s history. So I checked its etymology in the Oxford English Dictionary, which gives examples of the word’s use going back to the 14th century, when Geoffrey Chaucer used it in the sense of “to move about blindly, stupidly.” Its exact origin beyond this is uncertain, though there is an even older Icelandic word blonda that means to doze and a Swedish word blunda that means to shut the eyes.

So, can a computer blunder? When I began writing this, I thought not. I believed the blunderer needs some level of consciousness, and a computer cannot have known better or calculated differently because it is a prisoner of its programming. And yet, if we drop the requirement that a computer have consciousness and intent and simply think of blundering as moving blindly or stupidly in the Chaucerian sense, then yes, I think a computer can blunder, even if the programmer deserves the blame or, in the case of a game, the credit. After all, who wants to play a perfect machine?

By Jon-Paul Dyson, Vice President for Exhibits and Director of the International Center for the History of Electronic Games

Hours 10 a.m.–5 p.m. | Fri. & Sat. till 8 p.m.
